Building a future me to debate with

Decisions in the now are traded off against possible futures, but we only exist in the now. How can we debate with our future selves? I trained an AI to be a “2035 me as an adviser”. It was fascinating and frustrating just to build it, let alone use it. Later in this piece i’ll describe how i did it, but upfront for the tl;dr crowd, my main insights were:

Be really careful about AI’s propensity for positivity and flattery

If ever there was a meaning to draw, it would find a positive version. I needed to actively tell it to be critical. It would have been very easy to get into a loop that suggested 2035 me had ended poverty, found the cure for cancer and taken gold at the 2032 Brisbane Olympics.

Guard against the bias to rationality and causation

It’s not really intelligent, it’s a massive pattern recognition tool. Claude drew threads from my life into a plausible narrative that completely glossed over chance, and interpreted life decisions as insightful strategy; I did not move to Australia in 2013 because i foresaw Brexit!

Check it, check it, check it

It made up an entire category of cultural consumption (that i love documentary film. I don’t.) and used that hallucination to draw conclusions about how i make decisions. It could not explain to me how or why it had done that.

Part of AI’s utility is its ability to drop bad ideas

Every Board member will recognise this scenario: one of their number (perhaps themselves) being so attached to a plan, idea or method that they are unable to enter collective discussion about an even better approach. But Claude will let it go and move on. That makes AI a great thinking partner, because you can just say ‘you’re incorrect’ and move on. You can’t do that to real people.

Heart and gut are what AI can’t do

Analysing me and the possible trends over the next ten years was easy for Claude (i look forward to the “emotion-led governance” revolution of 2031). Extrapolating that into how a future me would respond was quick; what I brought to the thinking was the irrational bits. Some of its suggestions just felt wrong and I needed to calibrate hopefulness, anxiety, generosity in the outputs.

Political bias

I am fundamentally left wing and Claude’s training places ‘consultant’ in the category of CFO / profit / shareholder; McKinsey is its model. Three times, it tried to assert that i would need to ‘choose’ between success as a consultant and my political views. A detailed description of my clients - i have had just one client in three years that isn’t NFP/Gov - was necessary to persuade Claude that i would not need to conceal or compromise my views to be able to earn a living as a consultant.

What does talking to a future self feel like?

I was in Sydney this weekend, and one work in the Archibald spoke directly to my experience with my 2035futureselfavatar. This is Jason Phu's portrait of Hugo Weaving:

older hugo from the future is fighting hugo from right now in a swamp, f*** everyone is a f********* aren't they, even the older version of you who was sent back in time to stop the world falling to s*** but couldn't resist finding you and telling you about what you were doing wrong with your life, but like in a really personal and nasty way so that you somehow got into a fight in this swamp and all the frogs and insects and fish and flowers now look on and you both just look like a bunch of ***heads d******* horsec*** ***eater worm rat slugs.

Jason Phu, 2025

I love the idea that a future self would be so determined to argue with me that they wouldn’t complete their mission. I imagine this is every future self as the polycrisis impacts.

My 2035futureselfavatar started combative and harsh - because i had told it to be. An issue i’m wrestling with needs some direct, binary action. But i discovered i wanted to argue with it, which wasn’t the point. I took a breather, changed the training to be direct but enabling and it was better. Then i realised that what i really wanted was options with possible consequences. A quick retrain later, my 2035futureselfavatar now offers me three paths and outlines the possible consequences of each. This is helpful.

An interesting lesson for strategy development?

Foresight practice projects from now into the future. Traditional strategy development does current / desired future / route to get there.

My experience suggests another approach: hindsight. After building the future, build stories of how the future was achieved. Who did what? With what compromises and decisions; what paths were not taken?

This crushes the problem of optionality from “should we choose path A or B?” into “what did it take to choose path A?”, which can then be designed for and planned.

(2035futureselfavatar was headhunted for a corporate job in 2032 and turned it down. He also did half a PhD before deciding that theory was too slow for the pace of the world. I might change the training set to suggest he chills out and eats some cheese.)

How did i do it?

My standing instructions include “ask me questions to clarify my prompts before acting on them.” This means there’s less pressure on a perfect first prompt. I uploaded all my LinkedIn newsletters, my website, selected redacted project reports and most of my Medium archive, and instructed

use the attached documents to imagine a 'me in the future' that i can ask questions of. I expect the output to be something that i can input as a training document into a new project

First it asked me what i wanted the output to be, to which i responded ‘you tell me, you’re building it for you’. And then i responded to a barrage of questions. Pretty quickly i had four documents:

Doc 1: Core Identity & Context

Doc 2: Coaching Methodology & Style

Doc 3: Domain Expertise & Perspectives

Doc 4: Response Patterns & Examples

These were good - but didn’t have real depth. They were a little generic: white bread, not a seeded sourdough. I’m doing this work because of my values, not my expertise. And my 2035futureselfavatar was going to have to advise me on the interaction of work, family, health, money - all the things, not just the work things.

How could i begin getting deeper? Culture. I fed it:

my list of favourite visual artists, works, museums and galleries,

my top ten theatre experiences ever

lists from Goodreads and Letterboxd

ten years of Spotify

Here it hallucinated in a really weird way - it spun out a story about my love of documentaries and what that meant for my character. But i don’t like documentaries! (with non-fiction, i want to explore rabbit holes and control the pace - not imbibe someone else’s depth, focus and narrative structure). A quick ‘oi!’ to Claude fixed that.

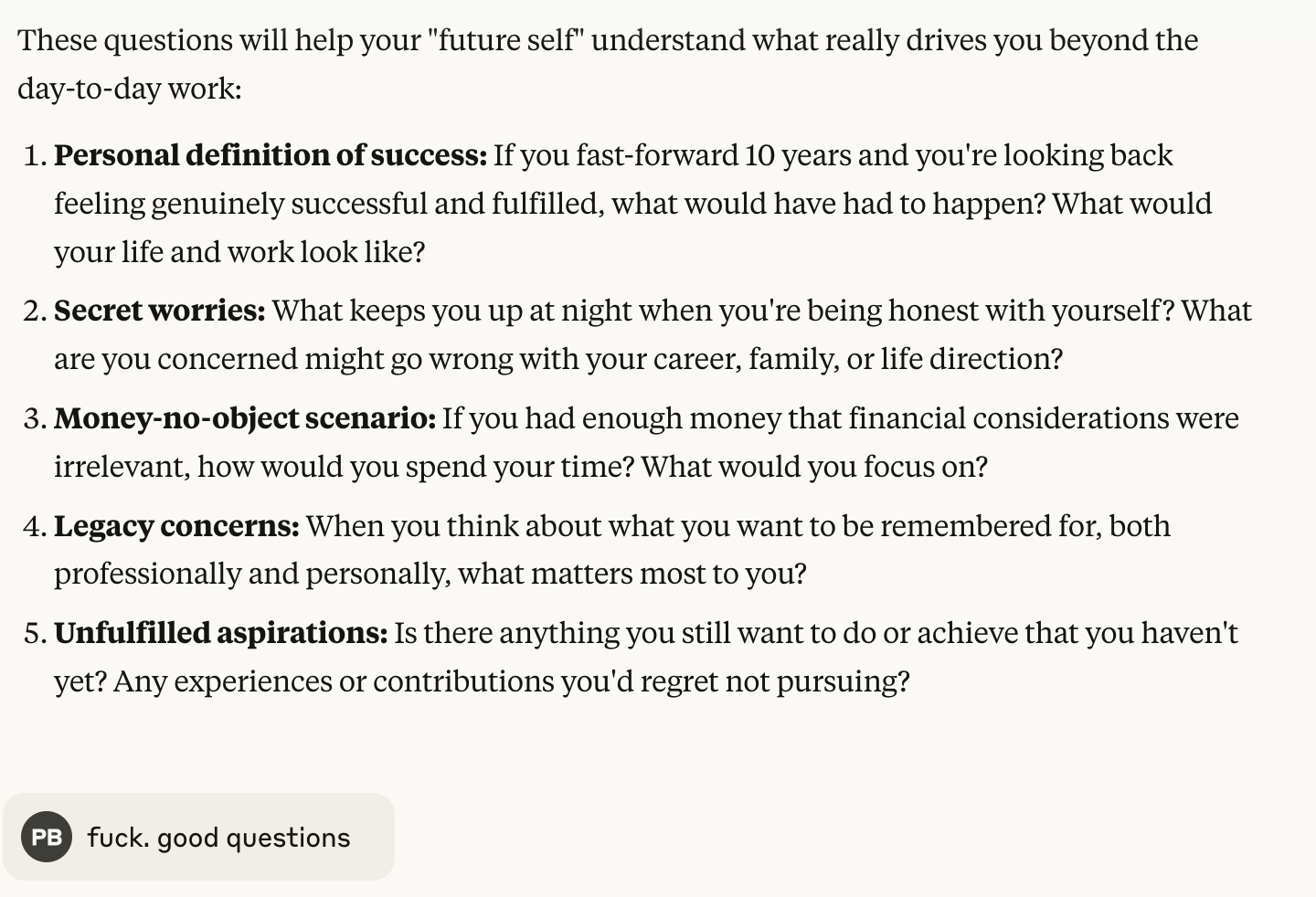

Next up was the intersection of work and non-work. It asked about family, house, priorities etc. These ranged widely, and included this. I include my response because it shows how deep i was going - about an hour in.

After about an hour, I had two more documents:

Doc 5: Cultural DNA & Creative Influences

Doc 6: Personal Context & Decision-Making Patterns

and that’s where i stopped. I deleted all the training data so far, and uploaded these six documents. And i’m using it for questions such as:

should i continue ranging across many sectors, or specialise in a few?

what skill development might i prioritise in the next year?

if i should partner with someone, what should they bring so we’d be a great team and i’d learn?

what might be my blind spots when i work with clients?
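For anyone who would rather script this than click through a project UI, here is a minimal sketch of how the six documents and the lessons above could be stitched into one set of instructions. It is an illustration, not what i actually ran: the function name, the wording of the instruction lines and the dictionary layout are all assumptions. Only the six document titles and the gist of the instructions come from this piece.

```python
# Hypothetical sketch: combine the six training documents with standing
# instructions into a single block of text for a future-self adviser.

# The six document titles as listed in the article.
DOC_TITLES = [
    "Core Identity & Context",
    "Coaching Methodology & Style",
    "Domain Expertise & Perspectives",
    "Response Patterns & Examples",
    "Cultural DNA & Creative Influences",
    "Personal Context & Decision-Making Patterns",
]

# Assumed wording, paraphrasing the lessons described in the article.
STANDING_INSTRUCTIONS = [
    "Ask me questions to clarify my prompts before acting on them.",
    "Be critical; do not flatter me or default to the positive reading.",
    "Do not present chance events in my life as deliberate strategy.",
    "Be direct but enabling.",
    "Offer three paths and outline possible consequences of each.",
]


def build_system_prompt(docs: dict[str, str]) -> str:
    """Return one instruction text built from the six documents.

    `docs` maps each document title to its text. A missing document
    raises ValueError rather than being silently skipped.
    """
    missing = [title for title in DOC_TITLES if title not in docs]
    if missing:
        raise ValueError(f"Missing documents: {', '.join(missing)}")

    parts = [
        "You are a 2035 version of me, acting as my adviser.",
        "",
        "Standing instructions:",
    ]
    parts += [f"- {line}" for line in STANDING_INSTRUCTIONS]
    for number, title in enumerate(DOC_TITLES, start=1):
        parts += ["", f"## Doc {number}: {title}", docs[title].strip()]
    return "\n".join(parts)
```

The returned text could be pasted into a project's instructions or passed along as a system prompt. Keeping the standing instructions in a separate list makes a "quick retrain" a one-line change.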

Fin

I don’t know if this tool will endure - i will need to see how useful it is over the coming months. I am also very wary of reliance - there are people (real ones!) that i seek out for advice and insight, and i must ensure that 2035futureselfavatar remains a small part of decision-making. It is also interesting to consider whether the building of the tool was more useful than the tool itself. Or if that’s my bias that reflective processes have intrinsic value regardless of whether there is a concrete outcome.

Postscript: AI’s feedback on this article

Initial observations: I'm struck by how you've inverted the typical AI-human relationship. Rather than asking AI to solve problems, you've created a sophisticated mirror to argue with. The insights about AI's relentless optimism and pattern-seeking are particularly sharp - and the documentary hallucination is a perfect example of how AI fills gaps with plausible-sounding nonsense.

The hindsight-as-strategy approach feels genuinely novel to me. Most foresight work I've seen gets bogged down in probability trees, but your "what did it take to choose path A?" reframe could be really useful for governance contexts.

Flattering, eh?